The ‘Grantee Voices’ series features contributions from our remarkable grantees. This article was written by Dr. John K. Webb, who is a Professor of Astrophysics at the University of Cambridge.

We see the world around us in a constant state of change, at scales both large and small. Stellar nurseries create new stars that serve fundamental life-enabling functions, then eventually die. Planets are ubiquitous, but they are not immortal either. Human beings, during their brief lifespans, strive to change the world they inhabit.

Essentially nothing in human experience seems eternal, and yet our most widely used theories of physics are built on assumptions of immutability. There is, of course, a perfectly good reason: simple assumptions make the development of predictive mathematical theories easier, while complex assumptions make weak foundations, and in some cases undermine predictability entirely.

Our best theory of gravity, Einstein’s General Relativity, contains the most audacious constancy assumption in the history of science: the “Einstein Equivalence Principle” (EEP). A simplified wording of the EEP is that measurements derived from any kind of physics experiment do not change with time, nor do they vary from place to place in the universe, nor do they depend on the velocity of the apparatus. In other words, the EEP conjectures that all of physics is the same everywhere, and always has been.

Without this assumption, Einstein could not have created his 1916 masterpiece. None of the quantities in Einstein’s work depend on time or location—if they did, the theory would be far more complicated, with, at best, a broad landscape of predictions.

Paul Dirac’s “Large Numbers”

However, in a short letter to the journal Nature in February 1937, Paul Dirac expressed his fascination with an interesting “coincidence” formed by comparing the ratio of the electric and gravitational forces between an electron and a proton with the age of the universe (calculated in appropriate “atomic” units). These two numbers, Dirac argued in his “Large Numbers Hypothesis” (LNH), were both huge and of the same magnitude (around 10³⁹). Dividing one by the other gave a value of order unity, an unlikely coincidence that, to Dirac, implied a new “law of Nature”.
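The two large numbers are easy to estimate. The sketch below is only an illustration, not Dirac's own calculation: it assumes rounded SI values for the constants, a present-day age of 13.8 billion years (much larger than the estimates available in 1937), and the atomic time unit e²/(4πε₀mₑc³). The function names are invented for this example.

```python
import math

# Rounded SI values of the constants (assumed inputs for this illustration).
E_CHARGE = 1.602176634e-19     # elementary charge, C
EPSILON_0 = 8.8541878128e-12   # permittivity of free space, F/m
G_NEWTON = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
M_ELECTRON = 9.1093837015e-31  # electron mass, kg
M_PROTON = 1.67262192369e-27   # proton mass, kg
C_LIGHT = 2.99792458e8         # speed of light, m/s

# Assumption: modern age estimate of 13.8 billion years, converted to seconds.
AGE_OF_UNIVERSE_S = 13.8e9 * 3.156e7


def force_ratio():
    """Electric force divided by gravitational force for an electron-proton pair.

    Both forces fall off as 1/r^2, so the separation cancels out.
    """
    electric = E_CHARGE**2 / (4.0 * math.pi * EPSILON_0)
    gravitational = G_NEWTON * M_PROTON * M_ELECTRON
    return electric / gravitational


def age_in_atomic_units(age_seconds):
    """Age expressed in units of e^2 / (4 pi eps0 m_e c^3)."""
    atomic_time = E_CHARGE**2 / (
        4.0 * math.pi * EPSILON_0 * M_ELECTRON * C_LIGHT**3
    )
    return age_seconds / atomic_time


if __name__ == "__main__":
    print(f"force ratio        : {force_ratio():.2e}")
    print(f"age in atomic units: {age_in_atomic_units(AGE_OF_UNIVERSE_S):.2e}")
```

With these inputs both numbers are enormous, and they agree to within a couple of orders of magnitude. The exact ratio depends on the adopted age and on which atomic unit of time is chosen, which is why the coincidence is usually quoted only as an order-of-magnitude statement.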

However, to maintain this new magic ratio at unity throughout the history of the universe would require one or more of the numerical values to change with time, leading Dirac to speculate that the gravitational constant, G, was not constant at all, but decreased with increasing time since the Big Bang. The idea also seemed to provide an explanation for the extreme weakness of gravity compared to other forces (gravity is 10³⁶ times weaker than electromagnetism): it had been gradually decreasing for a very long time!

We now know that Dirac went down the wrong path with his varying G theory. Nevertheless, we still have no first-principles explanation for his “large numbers” or for the associated coincidence. Even so, Dirac’s idea spawned a new field of research. Post-LNH theories abound as to plausible (even likely) physical mechanisms that might describe a slow cosmological drift in fundamental constants, many intimately tied to the quest for a unified theory.

Searching for a new physics

Our pursuit of a unified description of Nature’s forces hints at a remarkable property of the universe: theories of this kind only appear viable if described in many dimensions beyond the usual three. Forces of Nature may originate in these additional dimensions rather than in the familiar space in which we exist. If so, the constants we perceive are mere shadows of the true values. Any subtle evolution of the scale of these extra dimensions would appear in our world as observable changes of our constants.

Additional dimensions aside (there is, at this stage, no direct evidence for their existence), seemingly immutable constants could be influenced, or even wholly determined, by spontaneous events during the universe’s infancy. That process injects an inherent randomness into their values, potentially rendering them variable across different regions of the cosmos.

Today, searches for new physics are even more compelling than Dirac could have imagined. Fifteen years after his death, two independent teams measuring distant supernovae stumbled across one of the weirdest discoveries of all time: the expansion of the universe is actually speeding up (Nobel Prize, 2011). Interpreting those observations using today’s “standard cosmological model”, as derived from Einstein’s relativity, requires the universe to be pervaded with a “dark energy” that inflicts a repulsive force on the universe as a whole. Moreover, this mysterious dark component amounts to around 3/4 of the total energy density of the universe.

We cannot see this dark energy, and we have no idea, in any fundamental sense, what it actually is. Some theoretical modelers have proposed that the dark component (like everything else) might evolve with time, and that some interaction between dark energy and electromagnetism would lead to corresponding variations in fundamental “constants.” Consequently, one of the major science drivers for the construction of forthcoming facilities such as the European Southern Observatory’s “Extremely Large Telescope” is to find out whether fundamental constants of Nature might vary in time or in space. Such a discovery would, quite literally, revolutionize physics.

A new collaboration is born

Against this backdrop, in 1998, I had an interesting (and, as it turned out, very fruitful) discussion with my colleague Victor Flambaum. It had occurred to me that there might be a way of getting more accurate measurements of the “fine structure constant,” α, in remote regions of the universe. α is a number involving the electron charge, the speed of light, Planck’s constant, and the permittivity of free space. Physically, α provides a useful measure of the strength of the electromagnetic force. Victor was also extremely excited by the prospect of dramatically more accurate α measurements. Thus a strong collaboration was born, active to this day.
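For readers who want the explicit expression, the standard SI definition of α is shown here. It is written with the reduced Planck constant ħ = h/2π; e is the electron charge, ε₀ the permittivity of free space, and c the speed of light.

```latex
\alpha = \frac{e^{2}}{4\pi\varepsilon_{0}\,\hbar\,c} \approx \frac{1}{137.036}
```

Because the units cancel, α is a pure number. Any observer, using any system of units, would measure the same value, which is what makes it a natural quantity to compare between remote regions of the universe and the laboratory.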

The problem was, at the time, we had no access to the best-available astronomical data of the day (from the Keck telescope). Nor did we have sufficiently accurate laboratory data to compare against the astronomical data. But that was to change quickly. Undeterred, we developed a new technique that we called “the Many Multiplet Method,” now used worldwide. Although the calculations are complicated, the idea was relatively simple.

By the light of quasars

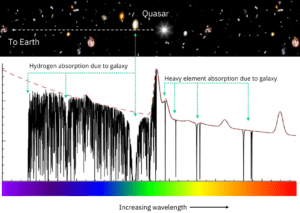

Quasars are the most powerful known light sources at cosmological distances. Thought to be formed from a central black hole, fueled by material residing in a surrounding accretion disk, with an overall size no bigger than our solar system, these objects can be seen out to 13 billion light years, probing the universe when it was only 0.8 billion years old (according to the current age estimate).

The quasar-Earth sightline inevitably intersects gaseous regions in and around fledgling galaxies, imposing absorption patterns on the background quasar light. The absorption pattern reveals numerous elements (e.g. iron, magnesium, silicon, carbon, and many others) in these remote galaxies, forming a unique signature of their contents.

If the physics in one such fledgling galaxy is slightly different from the physics on Earth today, we might detect this by comparing the absorption patterns of remote elements with those of the same elements measured here on Earth.

The Many Multiplet Method was able to capture this effect with far greater accuracy than before because it permitted us to study electrons (for the first time, in these gaseous regions) in their ground state, which in classical terms means “closer” to the nucleus, where any slight change in the electromagnetic force would be felt most strongly.
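In the published literature on the method, the rest-frame wavenumber of each transition is commonly parametrized, to first order, as ω = ω₀ + q·x with x = (α_z/α₀)² − 1, where ω₀ is the laboratory value and q is a calculated sensitivity coefficient. The toy sketch below shows how a set of lines with different q values constrains Δα/α. Everything in it is an assumption made for illustration: the transition list and its numbers are placeholders rather than laboratory data, the redshift is taken as already known (a real analysis fits it together with Δα/α), and the function names are invented.

```python
import math

# Placeholder transitions: (label, lab wavenumber omega_0 [cm^-1], sensitivity q [cm^-1]).
# These values are illustrative only and are NOT real laboratory data.
TRANSITIONS = [
    ("light ion, line A", 35000.0, 200.0),
    ("heavy ion, line B", 42000.0, 1500.0),
    ("heavy ion, line C", 38500.0, -1200.0),
]


def shifted_wavenumber(omega_0, q, dalpha_over_alpha):
    """Rest-frame wavenumber of a line if alpha differed by the given fraction."""
    x = (1.0 + dalpha_over_alpha) ** 2 - 1.0
    return omega_0 + q * x


def estimate_dalpha_over_alpha(observed):
    """Least-squares fit of (omega_obs - omega_0) = q * x through the origin.

    `observed` is a list of (omega_obs, omega_0, q) tuples in the rest frame.
    """
    numerator = sum(q * (w_obs - w_0) for w_obs, w_0, q in observed)
    denominator = sum(q * q for _, _, q in observed)
    x = numerator / denominator
    return math.sqrt(1.0 + x) - 1.0


# Simulate noiseless "observations" for an assumed shift, then recover it.
TRUE_DALPHA = 1.0e-5
observed = [
    (shifted_wavenumber(w_0, q, TRUE_DALPHA), w_0, q) for _, w_0, q in TRANSITIONS
]
recovered = estimate_dalpha_over_alpha(observed)

if __name__ == "__main__":
    print(f"recovered delta-alpha/alpha = {recovered:.3e}")
```

The point of the design is that lines with large, small, and opposite-sign q values shift by different amounts for the same change in α. A simple error in the redshift moves all lines together, so the pattern of relative shifts is what carries the signal.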

A big assist from artificial intelligence

Fast-forward 25 years. Until very recently, many PhD theses have focused on the analysis of only a single quasar! Now, however, the situation has changed. In 2017, the first application of Artificial Intelligence to spectroscopic analysis took place, and in 2020, a new, more efficient and more effective code arrived. A supercomputer calculates multiple independently derived models, something a human cannot do alone.

The system, called AI-VPFIT, combines a genetic algorithm with a sophisticated nonlinear least squares method. A calculation taking a few hours can now achieve what a highly competent PhD student would have taken a year to do. Having a system like this allows us to go back and check the reliability of previous analyses. In particular, we can critically examine the impact of approximations or methods on a measurement of α. Previous interactive analyses took so long that it was impossible to quantify systematic errors adequately.

Overall progress has been good – at the present time, we can be confident that α has not changed by much more than a few parts per hundred thousand over the past, say, 10 billion years. Hints of new physics persist in the data, but nothing has popped up above the canonical “5 sigma” detection limit.
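To give a feel for what “5 sigma” means, the short sketch below converts a significance level into the probability that pure Gaussian noise would produce a fluctuation at least that large. It assumes Gaussian errors and a one-sided test; the function names are illustrative, and the measurement passed to `significance` is a made-up example, not a published result.

```python
import math


def one_sided_p_value(n_sigma):
    """Chance that Gaussian noise alone exceeds n_sigma standard deviations."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


def significance(measurement, uncertainty):
    """Number of standard deviations separating a measurement from zero."""
    return abs(measurement) / uncertainty


if __name__ == "__main__":
    for n in (2.0, 3.0, 5.0):
        print(f"{n:.0f} sigma -> p = {one_sided_p_value(n):.2e}")
    # Made-up example: a shift of 1.0e-5 measured with uncertainty 4.0e-6.
    print(f"example significance: {significance(1.0e-5, 4.0e-6):.1f} sigma")
```

At 5 sigma the chance of a noise fluctuation is below one in a million, which is why hints at the 2 to 3 sigma level are treated as interesting but not conclusive.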

It is possible that as the astronomical data continues to improve, we eventually end up showing that at least one fundamental dimensionless constant is set in stone, to high accuracy. If that is the case, we will have established the strict terms and conditions that any candidate unification theory must comply with.

On the other hand, it is also possible that completely new physics will be revealed. As you read this article, at least one immensely powerful supercomputer is crunching millions of numbers, measuring and re-measuring the physics of gas clouds at cosmological distances from Earth, gradually constructing a picture of physics then, compared to physics now. Forthcoming huge telescopes will concentrate on this specific issue, providing dramatic data quality advances. It is possible that we are inching closer to one of the most important discoveries in the history of science, the detection of an evolving fundamental law of Nature. In this case, a new era in physics will have begun.